Quick Summary of Data Warehousing Environment Assessment

• A data warehousing environment assessment is the essential first step toward identifying source systems, target databases, and middleware requirements.

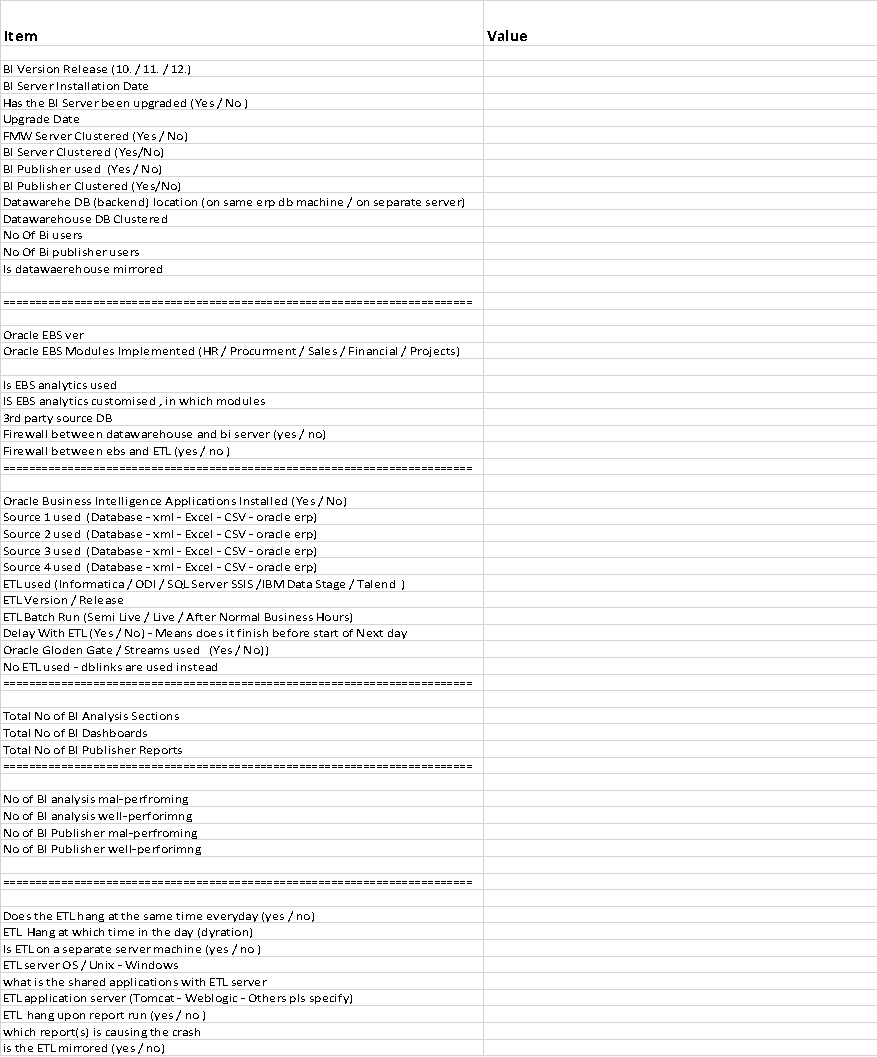

• A structured evaluation using questionnaires helps technical teams map architectures and estimate project scope accurately.

• By auditing components such as ETL tools and reporting layers early, organizations can prevent infrastructure bottlenecks and ensure seamless data integration across the enterprise.

Introduction:

To learn more about a future client's environment, you need to run a data warehousing environment assessment: a questionnaire that sheds some light on it.

An Oracle data warehousing environment would normally contain:

-Source database(s)

-Target database(s)

-ETL tool(s): Extract, Transform (or Transport), Load (e.g. Oracle Data Integrator, ODI)

-Data warehouse database

-Business Intelligence Tool(s)

-Reporting Tool

-Middleware server tool (e.g. Oracle Fusion Middleware)
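One way to keep track of these components during discovery is a small inventory record with one slot per category. The following is only a sketch: the class name, field names, and sample values are hypothetical and simply mirror the list above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EnvironmentInventory:
    """One list per component category from the list above (names are hypothetical)."""

    source_databases: list[str] = field(default_factory=list)
    target_databases: list[str] = field(default_factory=list)
    etl_tools: list[str] = field(default_factory=list)
    warehouse_databases: list[str] = field(default_factory=list)
    bi_tools: list[str] = field(default_factory=list)
    reporting_tools: list[str] = field(default_factory=list)
    middleware_servers: list[str] = field(default_factory=list)

    def missing_components(self) -> list[str]:
        """Return the categories for which nothing has been recorded yet."""
        return [name for name, items in vars(self).items() if not items]


# Sample values are made up for illustration.
inventory = EnvironmentInventory(
    source_databases=["ERP database (hypothetical)"],
    etl_tools=["Oracle Data Integrator"],
)
print(inventory.missing_components())
# ['target_databases', 'warehouse_databases', 'bi_tools', 'reporting_tools', 'middleware_servers']
```

The categories that come back empty are the ones to raise with the client first.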

Although the client will have more to tell, some important points can still be missed.

The embedded Excel sheet below can help you get an initial overview of that environment.

You can click it to edit it.

Note that the more questions are answered, the better you can estimate what the architecture looks like.
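To make that idea concrete, you could track how much of the questionnaire has been filled in. This is a minimal sketch, assuming the answers have been exported from the sheet into a plain dictionary; the question texts and function names are hypothetical, not taken from the actual Excel file.

```python
from typing import Dict, List, Optional


def is_answered(answer: Optional[str]) -> bool:
    """Treat None and whitespace-only strings as unanswered."""
    return bool(answer and answer.strip())


def answered_ratio(answers: Dict[str, Optional[str]]) -> float:
    """Fraction of questions with a non-blank answer (0.0 for an empty sheet)."""
    if not answers:
        return 0.0
    return sum(1 for a in answers.values() if is_answered(a)) / len(answers)


def unanswered(answers: Dict[str, Optional[str]]) -> List[str]:
    """Questions that still need a follow-up with the client."""
    return [q for q, a in answers.items() if not is_answered(a)]


# Hypothetical questions and answers for illustration only.
answers = {
    "Which source databases exist?": "Oracle 19c",
    "Which ETL tool is in use?": "Oracle Data Integrator",
    "Which reporting tool is in use?": "   ",
    "Which middleware server is in use?": "Oracle Fusion Middleware",
}
print(answered_ratio(answers))  # 0.75
print(unanswered(answers))      # ['Which reporting tool is in use?']
```

A low ratio is a signal that any architecture estimate is still provisional.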

The Excel file can be downloaded from this link:

People Also Asked (FAQs)

1. Why is a data warehousing environment assessment necessary for enterprises?

A data warehousing environment assessment identifies architectural gaps before deployment. Research shows that poor data quality costs businesses approximately 600 billion dollars annually. By conducting a formal audit of source systems and ETL tools, organizations can mitigate these financial risks and improve system reliability by up to 40 percent in the first year.

2. What are the key components evaluated during a data warehouse audit?

A standard assessment typically evaluates five core areas: data storage, ETL pipelines, metadata management, access tools, and security protocols. According to 2025 industry benchmarks, ensuring these five components are optimized can lead to a 25 to 30 percent reduction in labor and manufacturing costs through better automation and resource allocation.

3. How many questions should a data warehouse assessment questionnaire include?

A comprehensive questionnaire typically consists of 25 to 60 technical questions. Using a structured worksheet reduces discovery time by nearly 50 percent. This method helps prevent the common 28-point gap between executive performance expectations and the actual system reality often seen during peak data processing periods.

4. What is the projected ROI of performing a data warehouse environment assessment?

The global data warehousing market is projected to reach 51.18 billion dollars by 2028, growing at a 10.7 percent CAGR. Organizations that perform regular environment assessments are 25 percent more likely to stay within budget and complete migrations on schedule compared to those that skip the discovery phase.

5. How is the maturity of a data warehousing environment measured?

Maturity is measured on a scale of 1 to 5, covering technical infrastructure and organizational processes. Statistics indicate that 80 percent of organizations conduct these assessments to reach a maturity level of at least 3.5, which is the threshold required for successful enterprise-level cloud migrations and data integration.
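As a rough illustration of such a score, the sketch below averages per-dimension ratings on the 1 to 5 scale and compares the result with the 3.5 threshold mentioned above. The unweighted mean and the dimension names are assumptions made for this example; a real maturity model may weight dimensions differently.

```python
from statistics import mean
from typing import Dict

MATURITY_THRESHOLD = 3.5  # threshold quoted in the answer above


def maturity_score(ratings: Dict[str, float]) -> float:
    """Unweighted mean of 1-5 ratings (the equal weighting is an assumption)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for dimension, value in ratings.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{dimension}: rating {value} is outside the 1-5 scale")
    return mean(list(ratings.values()))


# Hypothetical ratings for the two areas named in the answer above.
ratings = {"technical_infrastructure": 4, "organizational_processes": 3}
score = maturity_score(ratings)
print(score)                        # 3.5
print(score >= MATURITY_THRESHOLD)  # True
```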

6. What are common risks identified during an environment assessment?

The most frequent risks include inaccurate data mapping and insufficient hardware scaling. Data from 2024 peak season reports show that while 70 percent of leaders feel confident, only 42 percent actually achieve successful system performance. Proper assessments help bridge this gap by identifying performance bottlenecks in middleware and target databases.

7. How does a cloud-based data warehouse assessment differ from on-premises?

Cloud assessments focus on vertical and horizontal scalability and performance-per-dollar metrics. Modern benchmarks for platforms like Snowflake and BigQuery suggest that optimized environments can handle terabyte-scale transformations while maintaining sub-second latency. Assessments ensure that cloud licensing costs do not exceed the planned budget by identifying redundant data sets.

8. What role does metadata play in a data warehousing environment assessment?

Metadata management is a critical success factor for 65 percent of modern architectures. Assessments verify technical and business metadata to ensure data lineage is clear. Without this verification, organizations often face a 20 percent increase in scope creep due to a lack of understanding of where data originates.

9. Which performance metrics are prioritized in a data warehouse evaluation?

Performance metrics include query latency, user concurrency, and ETL throughput. For high-performing systems, the goal is often an average query response time of under 2 seconds. Regular assessments allow teams to tune these metrics, which can increase end-user adoption rates by approximately 40 percent across the business.
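Two of these metrics are easy to compute from raw measurements. The sketch below checks an average query response time against the 2-second goal mentioned above and derives ETL throughput as rows per second. The function names and sample figures are hypothetical.

```python
from statistics import mean
from typing import Sequence

TARGET_AVG_SECONDS = 2.0  # the "under 2 seconds" goal mentioned above


def meets_latency_target(latencies_s: Sequence[float],
                         target_s: float = TARGET_AVG_SECONDS) -> bool:
    """True when the average query response time is below the target."""
    if not latencies_s:
        raise ValueError("no latency samples provided")
    return mean(latencies_s) < target_s


def etl_throughput(rows_loaded: int, elapsed_s: float) -> float:
    """Rows loaded per second for a single ETL run."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return rows_loaded / elapsed_s


# Made-up sample measurements for illustration.
samples = [0.5, 1.0, 3.0, 1.5]            # average is 1.5 seconds
print(meets_latency_target(samples))      # True
print(etl_throughput(1_200_000, 600.0))   # 2000.0
```

Note that an average can hide slow outliers, such as the 3.0-second query in the sample, so percentile latencies are often tracked alongside it.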

10. How long does a typical data warehousing environment assessment take?

A standard architectural assessment can take between 2 and 6 weeks, depending on the number of source systems. By identifying legacy systems for decommissioning, an assessment can uncover 15 to 20 percent in potential cost savings. This timeline is essential for establishing a quantitative baseline for future system performance.